In this blog post, we will implement a Lambda function that reports changes occurring in an S3 bucket.

S3 bucket operations such as uploads and deletes trigger the Lambda function, which logs each event to CloudWatch Logs.

These logs can be used for analysis and alerting mechanisms.

Table Of Contents

- Creating S3 Bucket

- Creating IAM Role

- Creating Lambda Function

- Testing And Verification Of S3 Bucket Changes

AWS Services Used for this Implementation:

- Lambda Function

- S3 Bucket

- CloudWatch Logs

Let’s start by creating an S3 bucket.

Creating S3 Bucket

To create an S3 bucket, log in to the S3 console.

Enter a bucket name and choose the Region where the bucket should be created.


Leave the other settings at their defaults.

Click Create bucket.


We have created an S3 bucket.

Creating IAM Role

We need to create an IAM role with permissions for S3, Lambda and CloudWatch Logs. This role will be used when creating the Lambda function.

To create an IAM role, log in to the IAM console.

From the navigation pane, choose Roles, then click Create role.

Choose AWS service as the trusted entity and Lambda as the use case, then click Next: Permissions.


Search for lambda and choose the AWSLambda_FullAccess policy.


Search for CloudWatch and choose the CloudWatchFullAccess policy.

Search for S3 and choose the AmazonS3FullAccess policy.


With the required policies selected, click Next: Tags.

Provide a name for the IAM role, then click Create role.

Note: The policies attached to this IAM role grant full access to CloudWatch, S3 and Lambda.

For better security, create a custom policy that grants only the permissions the function actually needs.
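As a rough illustration of what a narrower setup could look like, the sketch below builds the two documents a Lambda role consists of: the trust policy (which the Lambda use case sets up in the console) and a custom permissions policy. The helper name `buildPermissionsPolicy` and the bucket name are made up for this example, and the `s3:GetObject` statement is an assumption that only matters if the function reads objects; the handler in this post only writes logs.

```javascript
// Trust policy: lets the Lambda service assume the role.
const trustPolicy = {
  Version: "2012-10-17",
  Statement: [
    {
      Effect: "Allow",
      Principal: { Service: "lambda.amazonaws.com" },
      Action: "sts:AssumeRole",
    },
  ],
};

// Hypothetical helper: a narrower permissions policy for a function that
// writes its own logs and (optionally) reads objects from a single bucket.
function buildPermissionsPolicy(bucketName) {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        // Needed so the function can write to CloudWatch Logs.
        Effect: "Allow",
        Action: [
          "logs:CreateLogGroup",
          "logs:CreateLogStream",
          "logs:PutLogEvents",
        ],
        Resource: "*",
      },
      {
        // Assumption: only required if the function reads object contents.
        Effect: "Allow",
        Action: ["s3:GetObject"],
        Resource: `arn:aws:s3:::${bucketName}/*`,
      },
    ],
  };
}

// "my-example-bucket" is a placeholder bucket name.
console.log(JSON.stringify(buildPermissionsPolicy("my-example-bucket"), null, 2));
```

The printed JSON can be pasted into the IAM policy editor as a starting point and tightened further, for example by restricting the logs statement to the function's own log group.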

Creating Lambda Function

To create a Lambda function, log in to the AWS Lambda console.

Click Create function and choose Author from scratch.

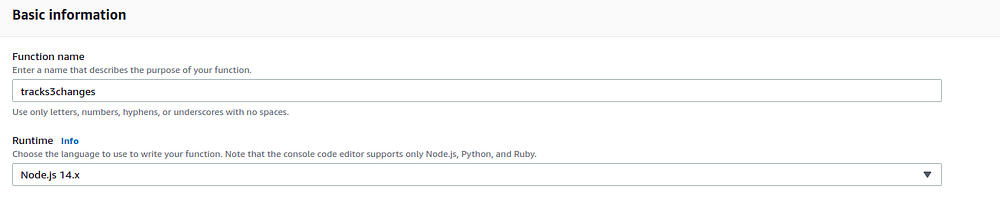

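Under Basic information, provide a name for the function.

Choose Node.js 14.x as the runtime. If that version is no longer offered, pick a currently supported Node.js runtime; note that newer runtimes may create index.mjs instead of index.js, where the handler is exported with export const handler rather than exports.handler.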

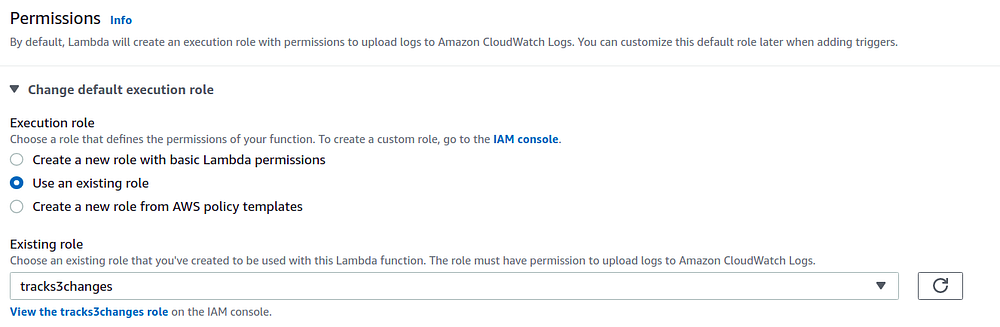

Under Permissions, choose Change default execution role, then select Use an existing role.

Now select the IAM role that we created in the previous step.

Click Create function.

Once the function is created, we need to add code to it.

In the Code section, double-click index.js, replace the existing code with the code below, then click Deploy.

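```javascript
// Lambda handler: logs each incoming S3 event and the affected object.
exports.handler = function (event, context, callback) {
  console.log("Incoming Event: ", event);

  // Name of the bucket that emitted the event.
  const bucket = event.Records[0].s3.bucket.name;

  // Object keys arrive URL-encoded, with spaces sent as "+".
  const filename = decodeURIComponent(event.Records[0].s3.object.key.replace(/\+/g, " "));

  const message = `File is uploaded in - ${bucket} -> ${filename}`;
  console.log(message);

  callback(null, message);
};
```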
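The `replace` and `decodeURIComponent` calls in the handler exist because S3 URL-encodes object keys in its event notifications, with spaces arriving as `+`. Here is a small standalone sketch of that step; `decodeS3Key` and the sample keys are invented for this example.

```javascript
// Hypothetical helper that isolates the key-decoding step from the handler.
function decodeS3Key(rawKey) {
  // Turn "+" back into spaces first, then decode the %XX escapes.
  return decodeURIComponent(rawKey.replace(/\+/g, " "));
}

console.log(decodeS3Key("reports/my+file%281%29.txt")); // reports/my file(1).txt
console.log(decodeS3Key("math/a%2Bb.txt"));             // math/a+b.txt
```

The order matters: a literal plus sign in a key arrives as `%2B`, so replacing `+` before decoding keeps it from being turned into a space.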

The required code is added and deployed.

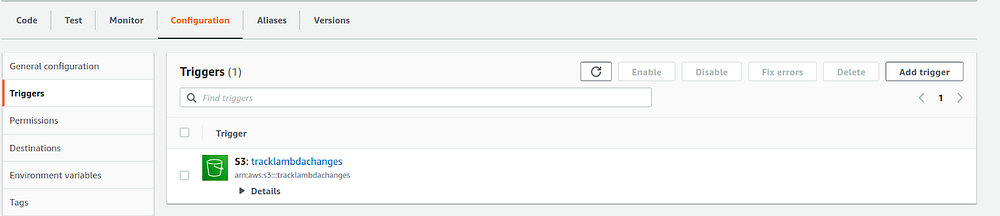

Because this Lambda function monitors changes to the S3 bucket, we have to add that bucket as a trigger for the function.

Under Function overview, choose Add trigger.

Under Trigger configuration, choose S3.

Select the S3 bucket that needs to be monitored.

For Event type, you can choose specific S3 operations or broader groups such as All object create events, All object delete events, Restore from Glacier, etc.

Tick the acknowledgement checkbox and click Add.

Once S3 is added as a trigger, you can check it under Configuration → Triggers.
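Behind the scenes, adding the trigger stores a notification configuration on the bucket. The sketch below only builds parameters in the shape accepted by S3's PutBucketNotificationConfiguration API, so the event type choices become visible as data; nothing is sent to AWS. The helper name, bucket name, and function ARN are placeholders.

```javascript
// Hypothetical helper: builds the request parameters for a bucket
// notification that invokes a Lambda function on the given event types.
function buildNotificationParams(bucketName, functionArn, events) {
  return {
    Bucket: bucketName,
    NotificationConfiguration: {
      LambdaFunctionConfigurations: [
        { LambdaFunctionArn: functionArn, Events: events },
      ],
    },
  };
}

// "All object create events" and "All object delete events" correspond to
// the wildcard event types below. The bucket and ARN are placeholders.
const params = buildNotificationParams(
  "my-example-bucket",
  "arn:aws:lambda:us-east-1:123456789012:function:s3-change-logger",
  ["s3:ObjectCreated:*", "s3:ObjectRemoved:*"]
);

console.log(JSON.stringify(params, null, 2));
```

When the trigger is added through the console, the permission that allows S3 to invoke the function is set up as well; a scripted setup would have to grant that separately.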

Testing And Verification Of S3 Bucket Changes

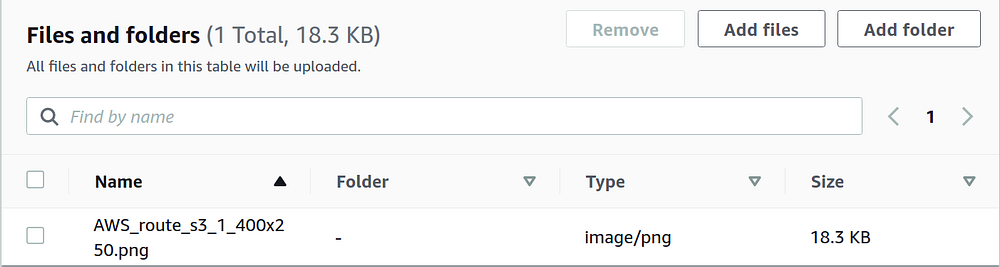

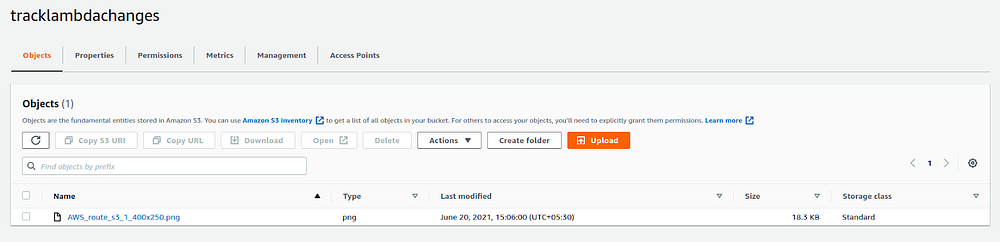

Since we created the S3 trigger for All object create events, let us upload a file to the S3 bucket and see how the Lambda function tracks it.

Go to the S3 console and click the S3 bucket.

Click Upload, then click Add files.

Select the files from your system and click Upload.

The file is successfully uploaded to the S3 bucket.

This object create event (the upload) should now be picked up by the Lambda function and logged in CloudWatch Logs.
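Before opening CloudWatch, the same flow can be rehearsed locally by passing a hand-written event to the handler logic. This is only a sketch: the sample event is trimmed to the fields the handler reads, the bucket and key are placeholders, and the handler is declared as a plain function so it runs outside Lambda.

```javascript
// Same logic as the deployed handler, declared as a plain function so it
// can run outside Lambda (in Lambda it is assigned to exports.handler).
function handler(event, context, callback) {
  const bucket = event.Records[0].s3.bucket.name;
  const filename = decodeURIComponent(
    event.Records[0].s3.object.key.replace(/\+/g, " ")
  );
  const message = `File is uploaded in - ${bucket} -> ${filename}`;
  console.log(message);
  callback(null, message);
}

// Hand-written, trimmed-down S3 event; real events carry many more fields.
const sampleEvent = {
  Records: [
    {
      eventName: "ObjectCreated:Put",
      s3: {
        bucket: { name: "my-example-bucket" },
        object: { key: "uploads/hello+world.txt" },
      },
    },
  ],
};

// The callback runs synchronously here, so `result` is set right away.
let result = null;
handler(sampleEvent, {}, (err, message) => {
  result = message;
});
```

Running this with Node.js prints the same message line that should appear in the log stream after a real upload.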

To check this, log in to the CloudWatch console.

From the navigation pane, under Logs, choose Log groups.

You can find the log group that was created by the Lambda function.

The log group is named after the Lambda function, in the form /aws/lambda/<function-name>.

Choose the log group, then select the log stream inside it.

You can find the S3 operation that was performed on the bucket.

It also shows details such as the uploaded file, event time, region, etc.
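These details come from fields on each record in the event. The handler in this post reads only the first record, so the sketch below shows one possible way to pull the same details out of every record. `summarizeRecords` and the sample values are invented for illustration.

```javascript
// Hypothetical helper: one summary object per record in an S3 event.
function summarizeRecords(event) {
  return event.Records.map((record) => ({
    eventName: record.eventName, // e.g. "ObjectCreated:Put"
    eventTime: record.eventTime, // ISO 8601 timestamp
    region: record.awsRegion,
    bucket: record.s3.bucket.name,
    key: decodeURIComponent(record.s3.object.key.replace(/\+/g, " ")),
    size: record.s3.object.size, // may be undefined for some event types
  }));
}

// Hand-written sample with placeholder values.
const uploadEvent = {
  Records: [
    {
      eventName: "ObjectCreated:Put",
      eventTime: "2021-06-01T12:00:00.000Z",
      awsRegion: "us-east-1",
      s3: {
        bucket: { name: "my-example-bucket" },
        object: { key: "uploads/report+final.pdf", size: 1024 },
      },
    },
  ],
};

console.log(summarizeRecords(uploadEvent));
```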

So whenever a user uploads a file to the S3 bucket, it will be logged by the Lambda function.

These logs can be used for analysis and alerting purposes.
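For analysis and alerting, it can help to log one JSON object per line so that individual fields can be matched by name later, for example in CloudWatch Logs metric filters. The sketch below is one possible format, not something the original function does: `toLogLine` is a made-up helper, and marking delete events as WARN is an assumption chosen for this example.

```javascript
// Hypothetical helper: formats one S3 event record as a single JSON line.
function toLogLine(record) {
  const name = record.eventName;
  return JSON.stringify({
    // Assumption: deletes are treated as more noteworthy than uploads.
    level: name.startsWith("ObjectRemoved") ? "WARN" : "INFO",
    eventName: name,
    bucket: record.s3.bucket.name,
    key: decodeURIComponent(record.s3.object.key.replace(/\+/g, " ")),
  });
}

// Hand-written sample record with placeholder values.
const deleteRecord = {
  eventName: "ObjectRemoved:Delete",
  s3: {
    bucket: { name: "my-example-bucket" },
    object: { key: "uploads/old+file.txt" },
  },
};

console.log(toLogLine(deleteRecord));
```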
